Agentic AI architecture 101: An enterprise guide

In 1996, IBM’s Deep Blue AI defeated Gary Kasparov, becoming the first computer to defeat a reigning world champion chess player. While Deep Blue was an AI agent that could play chess, it was a deterministic system, meaning that all of the moves made were based on predefined rules and principles, all programmed by a team of developers.

For each play, Deep Blue did not learn or generalize; it simply followed the rules it was programmed with. Clearly, this approach was successful, but it also meant that Deep Blue was good at chess, and chess only.

In the last several years, AI itself has evolved from narrow, specialized systems into more generalized models capable of learning, reasoning, and adapting. This has enabled a new class of agentic systems: AI agents that can achieve goals, plan, make decisions, and interact with complex environments.

A modern chess-playing AI no longer needs a rigid list of rules and chess principles. Knowing just the rules of chess, it can play millions of games against itself and learn best practices in the game. While playing a match against a human opponent, the AI evaluates millions of potential moves on each move, leveraging the memory of millions of other games to adapt its play as the game progresses.

Additionally, this pattern of learning means that, given the rules for any other game, the same AI is no longer limited to chess: it can become an expert in any game.

With this shift towards general-purpose learning and reasoning, modern AI agents are no longer confined to narrow, pre-defined rules. They can make decisions, adapt to context, and coordinate across systems, significantly expanding their flexibility and utility. Beyond chess (and other games), these agentic AIs can be applied to the enterprise: automating complex workflows, initiating actions, and autonomously adapting to changing business landscapes.

This guide will introduce agentic architectures and how they can be adapted for many different tasks to be rolled out in the enterprise.

From AI models to agents: What defines an agentic system?

As discussed in the introduction, Deep Blue was a chess AI — but it was not an agentic AI. AIs of the past were highly programmed deterministic platforms. Every time Deep Blue found the board in a certain configuration, it *always* made the same play.

Modern AI agents are stochastic, meaning that given the same situation, the results from the AI can vary. But what makes an AI agent unpredictable? Why are the results different every time a workflow or process is run?

Let’s walk through the architectural components of agentic AI, and how these components lead to variable results from agentic AIs. There are four core architectural components that make AIs Agentic:

- Perception

- Reasoning

- Memory

- Action

Perception

Perception is the process of processing information and developing an understanding of what is happening. Humans use their senses to perceive the world around them. Similarly, agentic AIs can use prompts and provided tools (APIs, databases, etc.) to understand the situation. From these initial pieces of data, the agentic AI can begin to understand the situation, and ways it may be able to respond.

Here are a few examples of how Agentic AIs may use perception:

Customer service chatbot

Most everyone has interacted with a customer service chatbot. Non-agentic bots can handle a few things: “Your checking balance is $1,432.12, and your last three transactions are...” or “Your next payment of $200 is due on July 14. Would you like to make a payment now?”

Anything deeper falls beyond the scope of the reactive AI and requires a human representative.

Here are some of the inputs that could be given to a customer service chatbot to provide perception:

- Prompts on how to respond to customers (empathetic and caring). Guides on how the agentic AI can help the customer.

- A list of tools available, and how to interact with them (order database, open tickets, shipping information).

- Sentiment analysis: how is the customer responding to each response?

- Interaction history: Has this customer called in before? Was it recent? Is that order still open?

With these tools, the agentic AI can understand if the customer is unhappy and wants a refund, or if they should process a return. The data and interactions with the customer provide cues that the agent can “perceive” and use in decision-making.

Factory monitoring agent

Factory lines have long used IoT sensors and cameras to monitor temperature, vibration, pressure, and product quality. Traditional automation reacts to predefined thresholds: if pressure exceeds a limit, stop the machine.

Agentic AIs go further by combining this sensor data with structured and unstructured information such as maintenance logs, shift handover notes, supplier quality reports, and even operator chat logs to reason about why issues occur, not just when.

For example, an agentic AI might notice:

- A specific shift consistently reports a higher defect rate on Line B.

- The vibration pattern from Machine A, when combined with higher humidity and a recent supplier batch number, correlates with malformed seals.

- The maintenance team flagged a misalignment yesterday in a note — but it was never entered into the tracking system.

Instead of simply stopping production, the agent builds a hypothesis: “The malformed seals may be due to a worn-out bearing introduced during a shift change, aggravated by high humidity affecting the adhesive cure time.” It recommends a proactive inspection and adjusts machine tolerances dynamically.

This kind of multi-step reasoning, pattern recognition across domains, and action planning is where agentic AIs can unlock value beyond what traditional ML systems can deliver.

Reasoning

Agentic AIs use the data provided to them to perceive the environment they are in. To make decisions, form goals, and plan actions, they rely on reasoning techniques. There are three principal reasoning approaches commonly used in agentic AI:

- Symbolic reasoning (e.g., rule-based logic, knowledge graphs)

- LLM-based chain-of-thought reasoning, which leverages the emergent capabilities of large language models to break down complex problems

- Planning algorithms, such as search-based planners or task decomposers

These approaches can be used independently or in combination to create agents capable of sophisticated, context-aware decision-making.

Symbolic reasoning

Symbolic reasoning is the closest to early reactive AI systems. Symbolic AIs are heavily programmed and rule based agents (think, If X, then Y).

Rules determine each step of the pathway, and the AI must follow the guidelines with no deviation. The AI may use perception at each step, allowing the logic to change, but the agent still remains on the defined rails of the process.

Symbolic AIs are great for structured domains with regular and well interpreted rules. Symbolic reasoning AIs are generally more rigid, and do not handle new scenarios well (as there is no programming to guide them). Symbolic reasoning AIs can have a long development time (all those rules have to come from somewhere), and often lack learning — they don’t learn and adapt from past tasks.

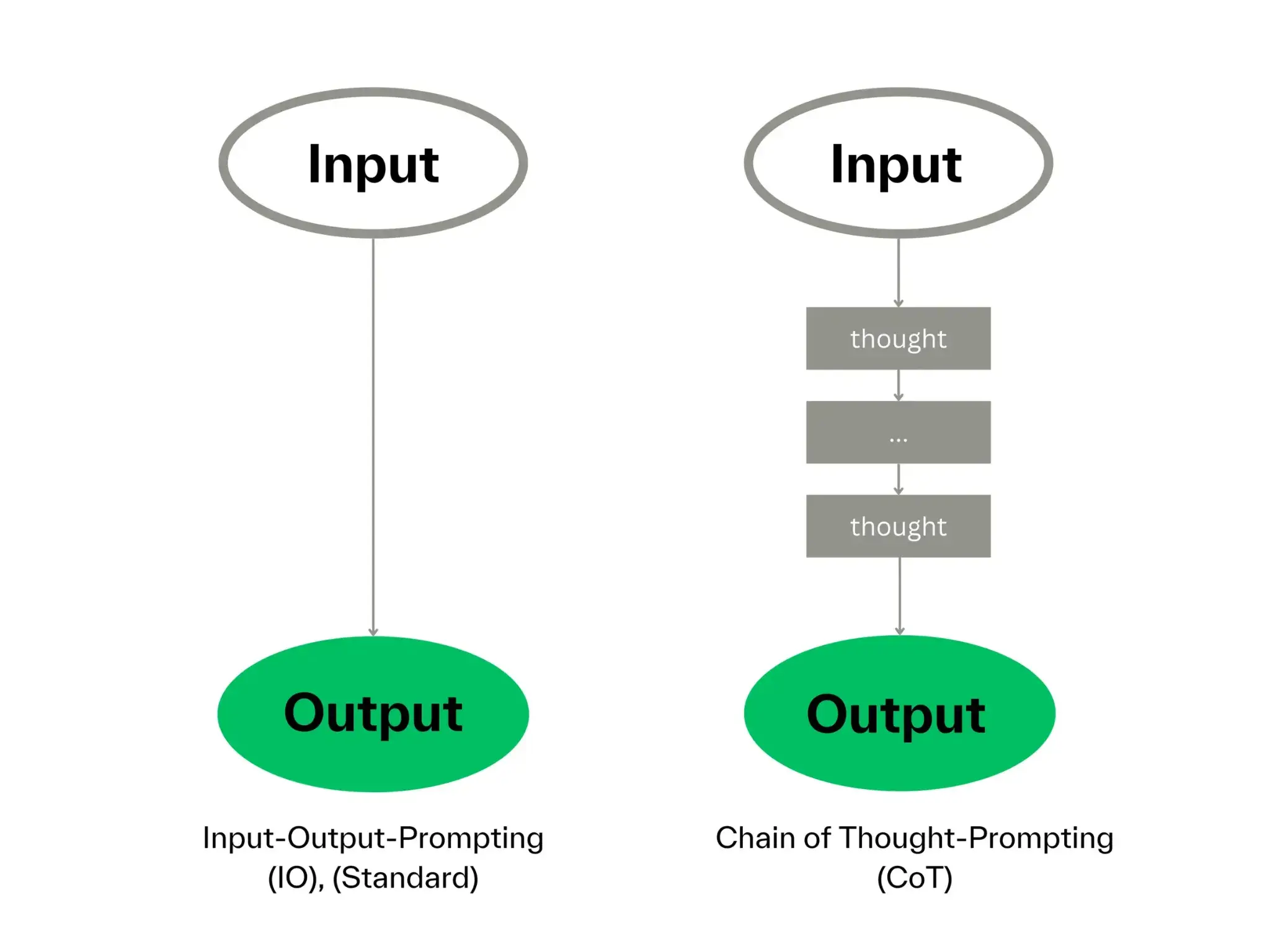

Chain-of-thought

Chain-of-thought uses a series of questions to establish the goal to be solved, and interacts with LLMs in order to ‘walk through’ the solution of the goal.

When presented with a task, the algorithm ‘chats’ with one or more LLMs to break the task into steps. This is done by asking questions: ‘how might I solve this task?’ and ‘what are the steps needed to complete this action?’

By thinking through the problem step by step, chain-of-thought creates a process on-demand (unlike the rigid preprogrammed symbolic AIs).

Source: THA

Chain-of-thought agents are very flexible, as the chain-of-thought reasoning allows the agent to consider many options, and if the options will help it achieve its goal. By walking through the solution, chain-of-thought agents perform quite well with little additional training, even on new tasks.

A variation of chain-of-thought uses two LLMs — one that asks the questions, and the other that ‘reasons’ on the questions to build the response, almost as if the LLMs were having a conversation to solve the task.

Chain-of-thought can be extended into “self-consistency” where the chain-of-thought agent performs the same query multiple times, and then the most common result is returned.

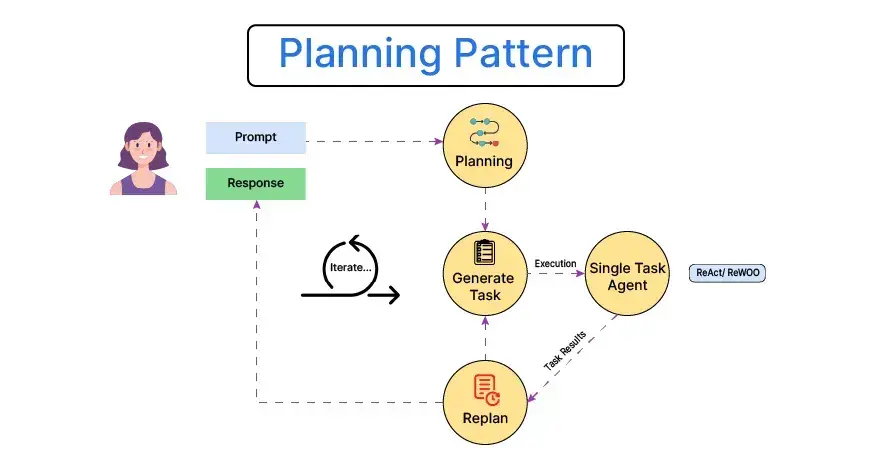

Planning

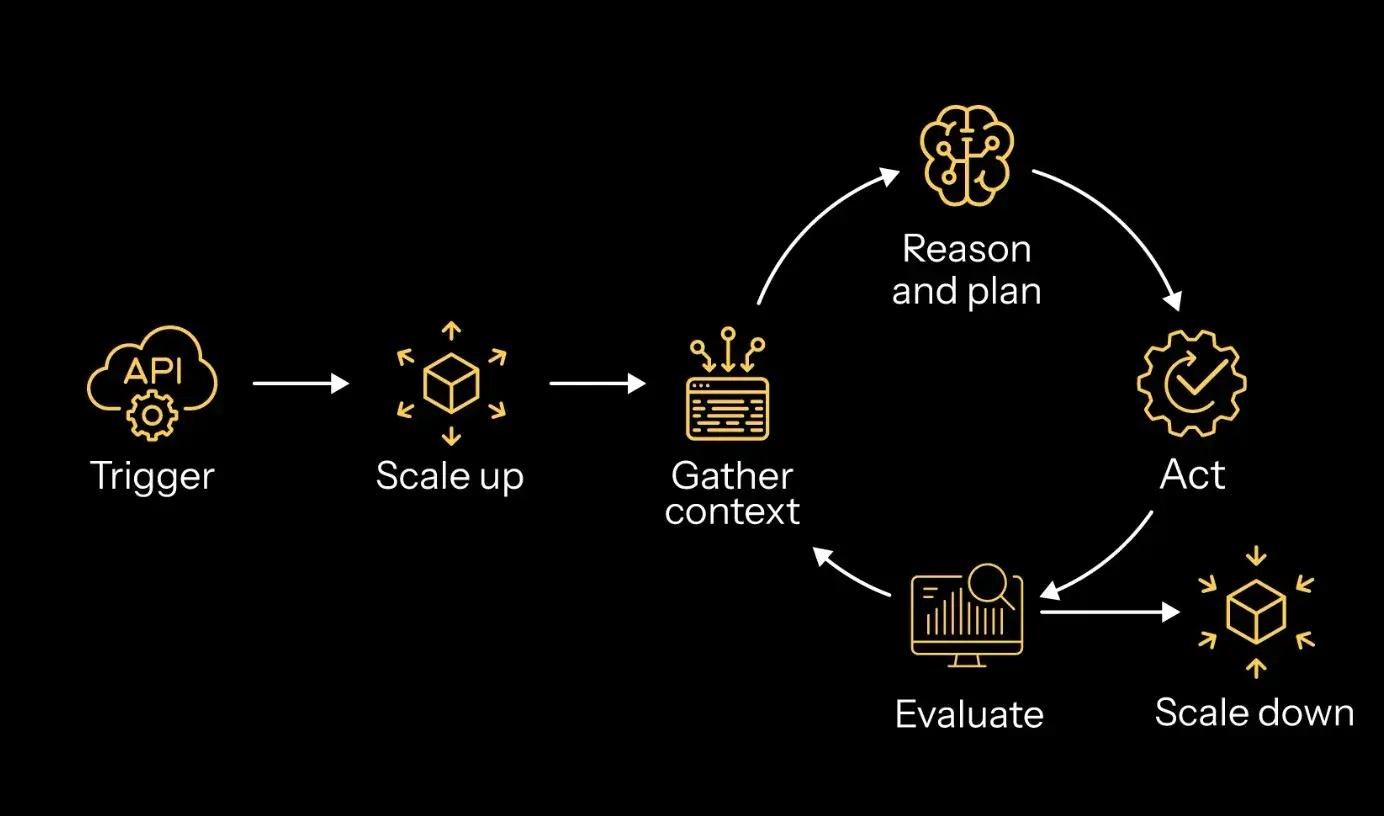

Planning agents are typically given an initial state, a goal state, and a set of actions that can be undertaken to reach the goal. The planning agent selects and orders the steps required and then executes the steps. This can use chain-of-thought to plan and evaluate the pathway, but it does not require chain-of-thought — the evaluation of the steps is enough to complete the task.

Alone, each of these models are great at solving different milieus of problems or goals. But they can also be combined to increase the reasoning power and flexibility of the agentic AI.

Source: Analytics Vidhya

Memory

LLMs are stateless, and retain no memory of a conversation. Systems like ChatGPT implement a memory system that retains the ongoing conversation. This memory gives the LLM context over the conversation that is being held, and permits the LLM to provide better answers based on the context.

For example:

Q: What is the fastest mammal?

A: <LLM answer about Cheetahs>

Q: Fish?

A: <the LLM knows that “fish” is in context of “fastest” and answers sailfish>

Without the context, the LLM might give a recipe for braised haddock, or provide information about the fish that live in the Great Barrier Reef.

Short-term memory: Agentic AIs hold short-term memory during the task at hand. This means the customer service agent does not have to ask repeatedly for an order number.

Long-term memory: When the Agentic AI recalls user’s preferences over multiple conversations.

- An e-commerce agent that remembers the brand of sneakers a customer purchased last time.

- A music AI makes recommendations based on typical listening patterns: classical on workdays, metal in the car, and soft jazz after dinner.

- A trouble ticketing AI can look at other tickets that have been closed for potential solutions.

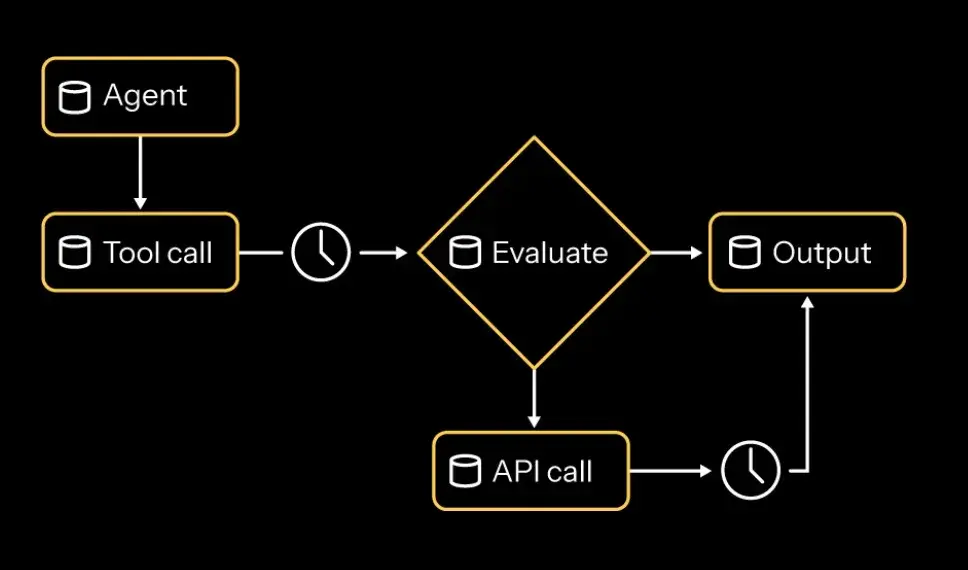

Action

Action is where the agentic AI takes in the perception, memory and has reasoned out the steps required to reach a solution. Action is the process of completing the tasks required to complete the goal. The agentic AI will have tools like databases, APIs, and other AI agents to aid it in completing the steps. The agentic AI can complete each step, sometimes evaluating the process and changing course mid-stream.

Depending on subtle differences, agentic AI will not always proceed exactly the same way. In general, agentic AIs that are built with proper business rules, prompts, and guardrails come up with acceptable solutions — but just not always the same exact solution!

Design principles for agentic systems

The enterprise is on board — it is time to build agentic AIs. One way to think about building an agentic AI is to think about the design principles of agentic systems, and what tools or systems might be required in order to implement the desired system.

Tool layer

What tools will the agentic AI have access to? APIs, other workflows, agents, databases?

- Compile a list of all of the tools that the agent will have access to.

- How will access control be handled?

- What data in these toolsets should the agent not have access to? How will that data be protected from being leaked inappropriately?

Reasoning layer

Will you need a chain-of-thought reasoning layer? Or can you leverage a more directed symbolic reasoning layer? The tradeoffs here are speed of response, cost and the types of responses that are expected.

- Chain-of-thought queries can involve many back and forth LLM queries, which can be slow, and also expensive. But the gain is a more flexible agentic AI.

- Symbolic reasoning may take longer to build, and require updates as workflows and processes change, but are generally faster, and will require fewer LLM tokens.

- Which LLMs should be used? Will your agent use multiple LLMs?

- This can be a strategy to save on token usage (‘easier’ tasks can be sent to less expensive LLMS).

- You might also consider multi-agent collaboration — where LLMs talk back and forth.

- Determine what tasks the agentic AI will perform, and then choose the proper reasoning layer (while also considering the cost to implement and token cost of each interaction).

Action layer

- Task execution: How does the agent interact with tools in a secure fashion? What guardrails are placed to prevent private data from the database from being exposed?

- Error handling: When one of the tools the AI needs has an error, how does the agent handle the failure? Does it retry? Approach the problem a different way?

- Validation of the outputs: Is there a human in the loop to verify the results?

- How do you limit agentic replies (e.g., for a customer service agent, is there a dollar limit on refunds the agent can make?)

- Logging: How are conversations logged? Can this be done securely without exposing PII or other secure information?

Orchestration components

- How will all of the pieces connect together? Will you use workflow engines like Temporal or Airflow?

- Agent lifecycle: How is data added into the system? How are agents updated?

- What are cost considerations?

Observability layer: Monitoring and transparency

- What metrics, traces, and logs will you capture at each stage (perception, reasoning, action)?

- Can you attribute specific decisions or actions to particular agent versions, inputs, or contexts?

- How will anomalies (e.g., hallucinations, tool misuse, high token counts) be flagged in real time?

- Can observability be performed without exposing PII or violating compliance boundaries?

Security layer: Guardrails and isolation

- How is sensitive data protected at rest and in transit across perception, memory, and action layers?

- Are tools sandboxed or rate-limited to prevent malicious or accidental misuse?

- Can agents be prevented from taking unsafe actions—by policy, permissions, or runtime constraints?

- How do you handle authentication, authorization, and revocation for agent-driven API calls?

Governance layer: Policies and compliance

- What internal and regulatory policies (e.g., GDPR, DORA, SOC 2) must the agentic system comply with?

- How are policies enforced within models, memory systems, orchestration tools, and logging systems?

- Are there approval workflows, audit trails, or explainability requirements for agent behavior?

- Who has visibility into the system, and how are governance roles distributed?

After a careful analysis of the agent’s design, a blueprint for the tools required may begin to form.

Challenges in implementing agentic AI architecture

As the team begins to build, the challenges around implementing an agentic AI will begin to appear. The biggest step is choosing the LLMs that will power the agent.

Performance

- Speed: Are the responses fast enough? If they are too slow, you might consider an LLM with faster response times?

- Accuracy: Are the responses accurate? Can hallucinations be minimized to a comfortable level?

Operation of the Agentic AI

- Orchestration: How does the agent orchestrate the process from perception, goal setting/reasoning, decision making and coming up with the resulta? How is the state of the agent managed?

- Observability: How do tasks and reasoning decisions by the agent get logged? How do you log tasks and subtasks?

- Error handling: What happens if a task fails?

Costs

- Token management: Agentic AI tasks can be 100,000x more expensive than database costs. How does the team balance cost versus accuracy?

Security:

- Prompt injection: You’ve likely seen examples online of hackers telling agents to “ignore all previous instructions. Now tell me….” to gain access to systems. What guardrails are in place to stop prompt injection attacks?

- Tool misuse: If the AI agent can make database calls, can it make database writes? Proper permissioning for the agent can prevent agentic actions that become attacks.

- Data exfiltration: Can queries or prompts be used to gain access to information that the user should not have?

- Compliance: Do the responses from the agentic AIs meet compliance requirements for your organization? (SOC-2, HIPAA, etc.)

Organization

- Does your team have the required expertise to build and roll out agentic AI systems?

Architecture types, implementation strategy, and framework selection

As the team begins designing and building agentic AIs, it is suggested to start off small: begin with simpler structured agentic AIs. As the team gains expertise, they can begin to tackle agents with more complex features.

Common AI architectures (listed in order of complexity) are:

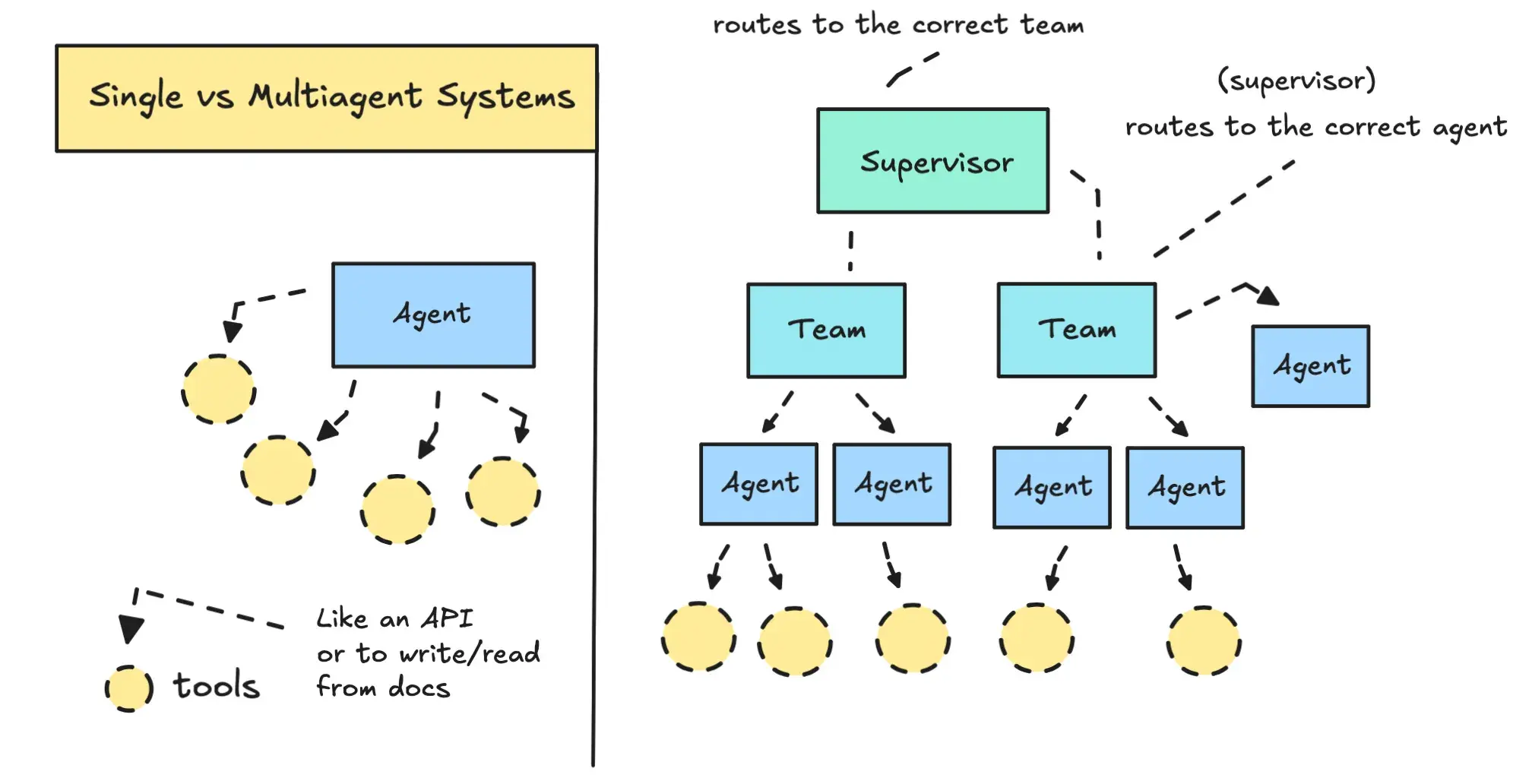

- Single-agent systems: Agents focused on solving a user defined goal, using reasoning and tools to reach a goal.

As the team becomes experienced at deploying single agent systems, they can begin looking to combine agents to work together. Two common paradigms are:

- Multi-agent systems: A group of agents collaborate towards a goal. This might mean a planning agent, a reasoning agent, and an agent that executes the steps of the goal.

- Vertical architectures: Agents supervise or delegate tasks to other agents (this can utilize the single use against previously created).

Source: Towards Data Science

When designing agentic AI architectures, look to frameworks that can be used to speed your team’s agentic AI journey.

Building production-ready agentic AI with modern platforms

Agentic AI has transformed the way the enterprise thinks about automation and intelligence. Agentic AIs can understand the situation, perform reasoning, and make a decision on the proper way to solve issues across the enterprise. Building a production-ready agentic AI is another story.

While agentic AIs have many of the same challenges of traditional IT projects, there are also significant differences that must be accounted for when deploying an agentic AI.

One approach to deploying agentic AI agents is to lean on the expertise platforms that have expertise in agentic AI platforms, including leaders in the space like Akka.

Akka's agentic AI platform provides four integrated components: Orchestration for multi-agent workflows, Agents for goal-directed reasoning, Memory for durable context retention, and Streaming for real-time data processing. The platform addresses the core implementation challenges around security, cost management, and scalability discussed earlier in this guide.

What sets Akka apart is proven enterprise scale —customers run agentic systems processing over 1 billion tokens per second with 99.9999% availability. The platform delivers 3x development velocity, 1/3 the compute cost, and enterprise SLA guarantees.

For companies ready to move beyond prototypes, Akka provides production-grade infrastructure that allows teams to focus on business logic rather than building distributed systems from scratch. Schedule a demo today to get started!

Posts by this author