What is Agentic AI?

The impact on your architecture

AI is a game changer, but it’s not enough.

LLMs can generate insights, but they’re unreliable, lack memory, and can’t take action. Moreover, most AI tools don’t integrate deeply with enterprise systems. Enterprises need a new approach: an AI-enabled digital workforce that can reason, adapt, and act intelligently over time. Enterprises need agentic AI.

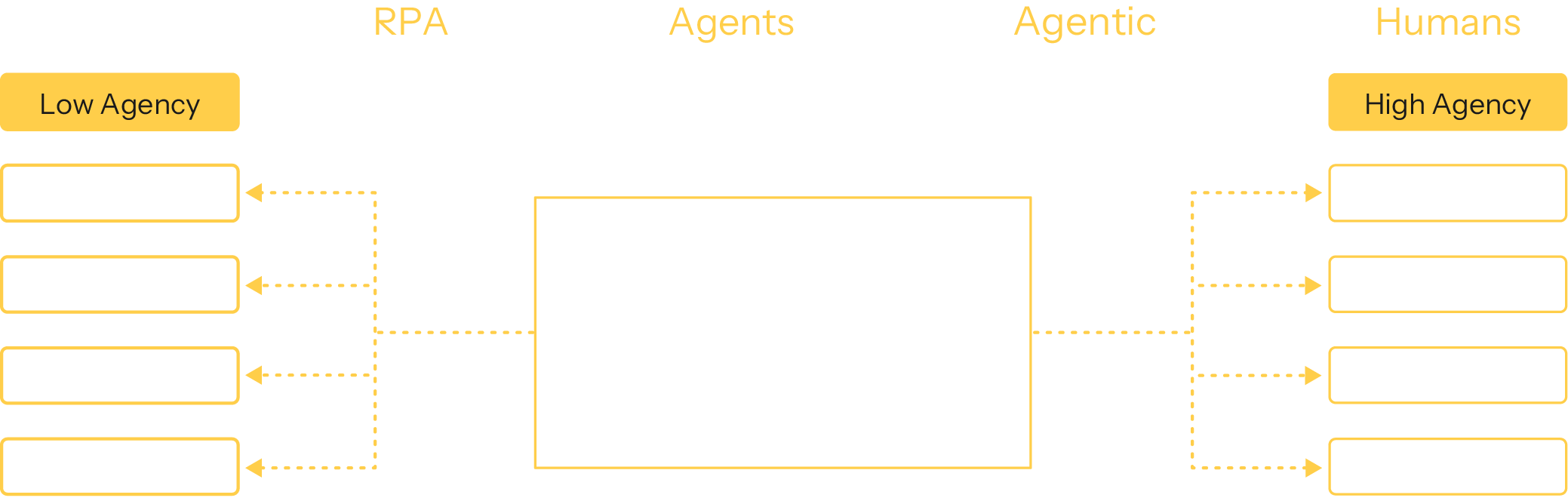

Assistants vs. agents

Agentic AI refers to AI agents that can reason, plan, and act autonomously while remaining governed and controlled.

Unlike AI assistants, which primarily respond to user queries and follow predefined workflows, agentic AI can independently make decisions, set goals, and execute tasks while remaining aligned with human intent and constraints.

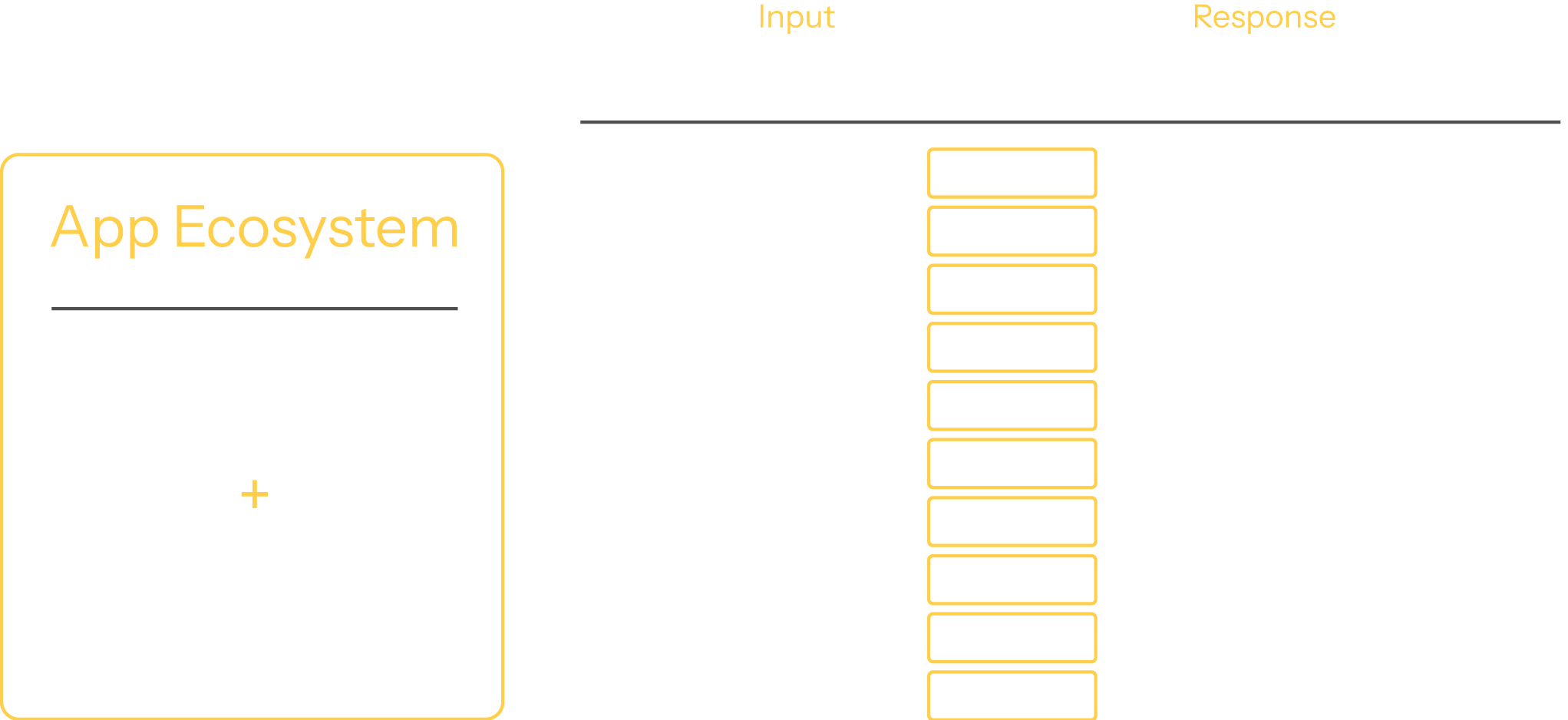

Agentic services integrate with existing applications to create a cohesive app ecosystem. This integration gives existing applications reasoning and planning capabilities, while giving agentic services real-world context and the ability to execute actions.

From transactions to conversations

Agentic AI services are a fundamental shift from the transaction-centered, request-response paradigm of traditional SaaS applications to a conversation-centered, iterative paradigm built around large language models (LLMs).

Large language models in agentic AI

At the core of AI agents are large language models (LLMs)—powerful neural networks trained on vast amounts of text to generate human-like responses. They excel at reasoning, summarization, and decision-making, making them a critical component of agentic AI.

LLMs have three fundamental limitations that require careful engineering to be effective in enterprise-grade agents:

- LLMs are slow and unreliable. Unlike traditional software, which operates in milliseconds, LLMs can take seconds per response and are prone to variability in output quality. Agents need orchestration layers to handle execution reliability, caching, and optimization.

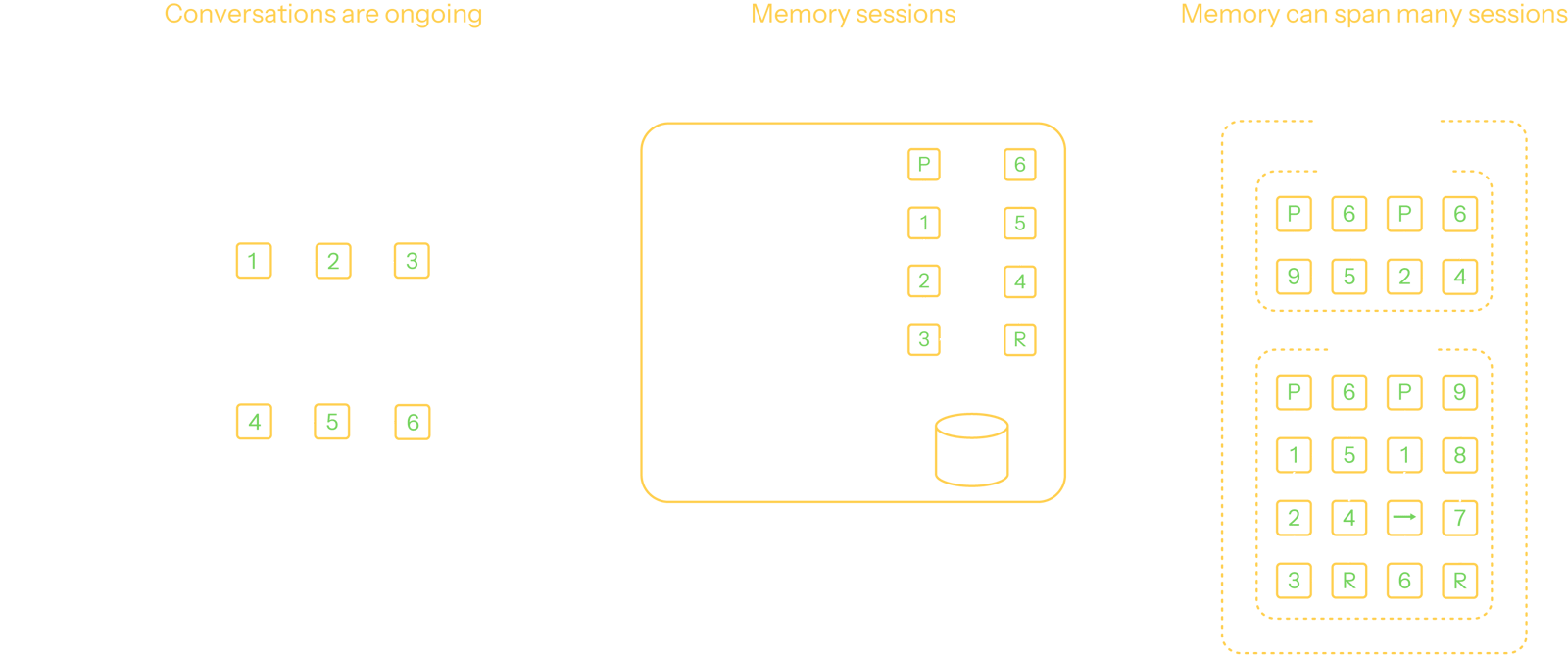

- LLMs are stateless. They don’t retain memory between interactions, meaning agents must incorporate a persistent memory system to track both short- and long-term context.

- LLMs have limited knowledge. They’re trained on public data and have fixed knowledge cutoffs, which means they can’t access up-to-date or proprietary information out of the box. Agents must be integrated with retrieval systems or private data sources to ground responses in real-time and domain-specific knowledge.

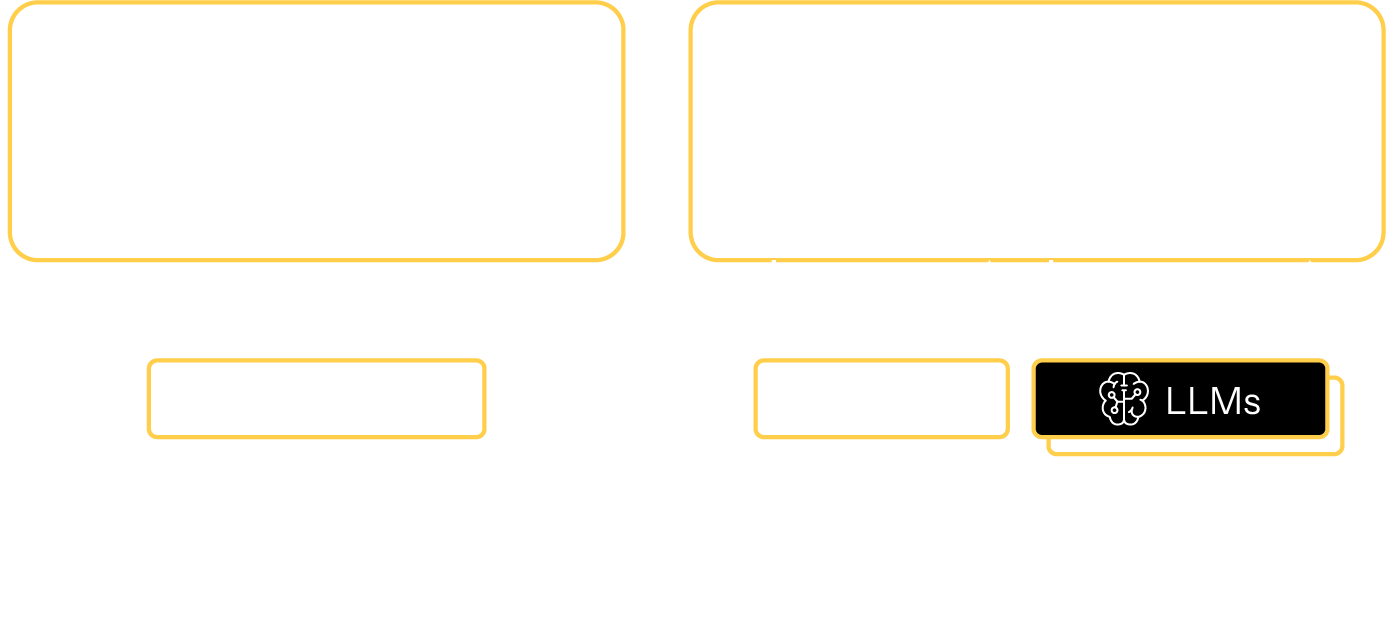
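The first limitation above, slow and unreliable calls, is commonly handled by wrapping each model call in a retry-and-cache layer. The following is a minimal sketch under stated assumptions: `flaky_llm` is a hypothetical stand-in for a real model client, and `reliable_call` and its parameters are illustrative names, not part of any library.

```python
import time
from typing import Callable, Dict


def reliable_call(
    call_llm: Callable[[str], str],
    prompt: str,
    cache: Dict[str, str],
    max_attempts: int = 3,
    base_delay: float = 0.0,  # 0 keeps the demo fast; use a real delay in practice
) -> str:
    """Return a cached answer if present; otherwise call the model with retries."""
    if prompt in cache:
        return cache[prompt]
    last_error = None
    for attempt in range(max_attempts):
        try:
            answer = call_llm(prompt)
            cache[prompt] = answer
            return answer
        except TimeoutError as err:
            last_error = err
            time.sleep(base_delay * (2 ** attempt))  # exponential backoff
    raise RuntimeError(f"LLM call failed after {max_attempts} attempts") from last_error


# Hypothetical model client that times out once, then succeeds.
calls = {"count": 0}


def flaky_llm(prompt: str) -> str:
    calls["count"] += 1
    if calls["count"] == 1:
        raise TimeoutError("simulated timeout")
    return f"answer to: {prompt}"
```

Caching on the exact prompt text is the simplest possible policy; a production system would likely need expiry and a key that includes the model and its settings.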

Giving LLMs context

Because LLMs are stateless and prone to unreliable responses, modern agentic systems don’t rely on them to answer standalone prompts. Instead, the majority of what an LLM sees in each interaction is context, not the original user input.

That context is carefully constructed by the agent itself—assembled from a combination of sources like recent conversational history, relevant documents retrieved via semantic search, up-to-date information from an event stream, and even structured data pulled from internal APIs. The agent’s job is to build the right prompt, enriching it with just enough context to guide the LLM toward a useful answer, without overloading it with irrelevant or noisy information.

This orchestration—figuring out what the LLM should know at every step—is one of the most important and underappreciated parts of building enterprise-grade AI agents. The quality of the output often depends less on the LLM itself and more on how well the system prepares the input.

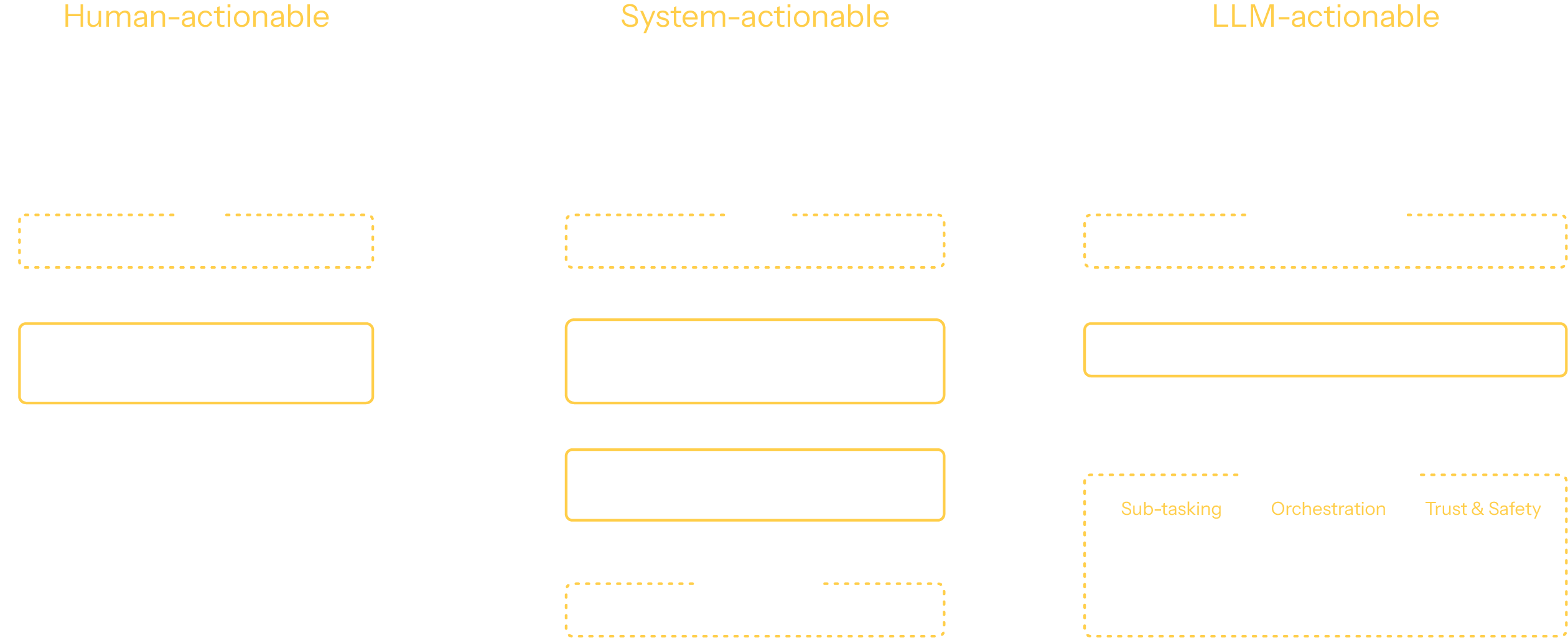
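The context assembly described above can be sketched as a function that merges several sources into one prompt under a size budget. This is an illustrative sketch only: `ContextSources`, `build_prompt`, and the character-based budget are assumptions made for the example, and a real agent would more likely count tokens and rank items by relevance.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ContextSources:
    history: List[str] = field(default_factory=list)    # recent conversation turns
    documents: List[str] = field(default_factory=list)  # semantic-search results
    events: List[str] = field(default_factory=list)     # recent event-stream items
    records: List[str] = field(default_factory=list)    # structured data from internal APIs


def build_prompt(user_input: str, sources: ContextSources, max_chars: int = 2000) -> str:
    """Assemble a prompt, adding context items until the character budget is spent."""
    sections = [
        ("Conversation history", sources.history),
        ("Retrieved documents", sources.documents),
        ("Recent events", sources.events),
        ("Internal records", sources.records),
    ]
    question = f"User request:\n{user_input}"
    # Approximate budget: section titles and separators are not counted.
    budget = max_chars - len(question)
    parts: List[str] = []
    for title, items in sections:
        kept = []
        for item in items:
            cost = len(item) + 3  # "- " prefix plus a newline
            if cost > budget:
                break  # this section is full; move on to the next one
            kept.append(f"- {item}")
            budget -= cost
        if kept:
            parts.append(f"{title}:\n" + "\n".join(kept))
    parts.append(question)  # the user input comes last, after the context
    return "\n\n".join(parts)
```

Note that the user input ends up as a small part of the final prompt, which matches the observation that most of what the LLM sees is context.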

LLMs create actionable outputs

LLM outputs are processed by one of three entities: humans, systems, or another LLM. A human-actionable output is unstructured text or an image that answers a specific prompt. A system-actionable output is a function call, executable code, or structured data. Finally, an LLM-actionable output is a prompt and context that another LLM can use as input for further reasoning or task execution.

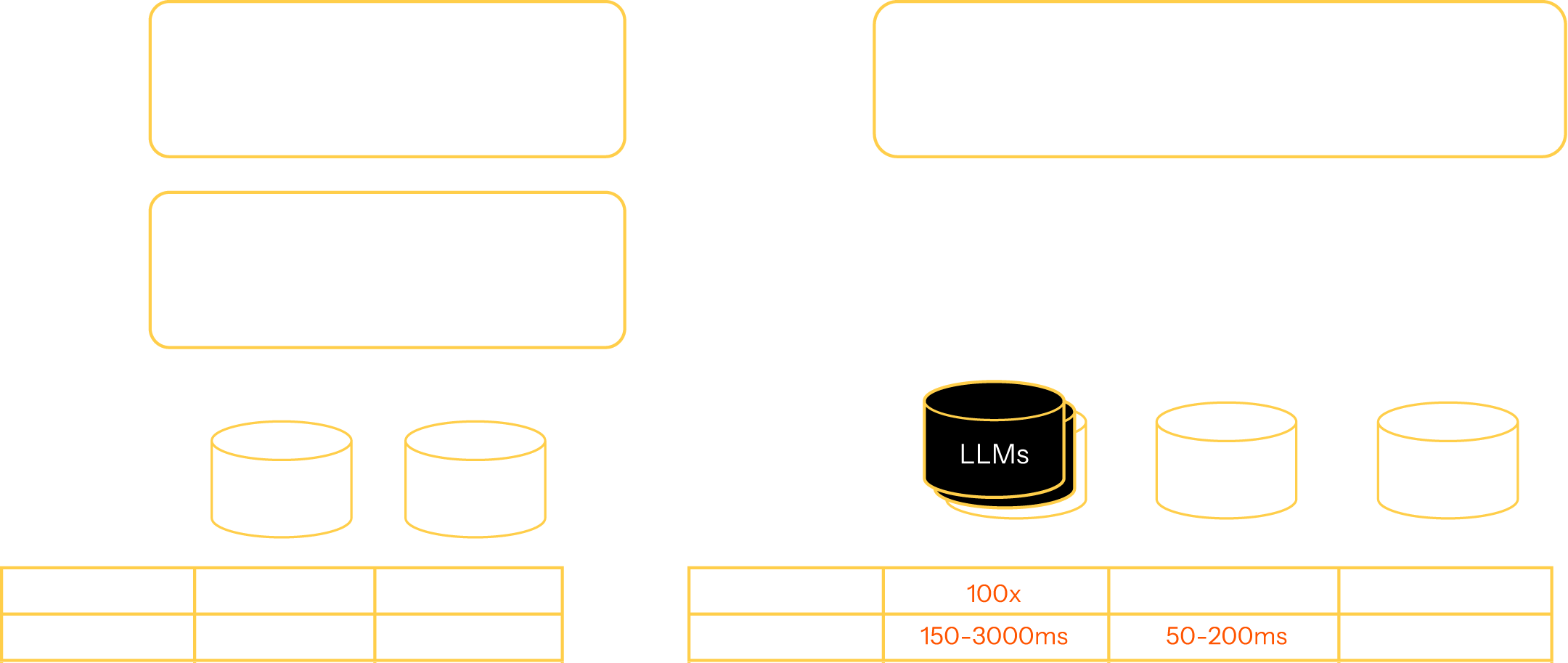
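One way to picture the three output types is a dispatcher that inspects each LLM output and decides who should act on it. The sketch below assumes a hypothetical JSON convention (a `tool` key for system calls, a `next_prompt` key for hand-offs to another LLM); this convention and the `TOOLS` registry are invented for illustration and do not correspond to any specific model API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry: functions the agent is allowed to execute.
TOOLS: Dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
}


def dispatch(raw_output: str) -> Dict[str, Any]:
    """Route an LLM output to a human, a system call, or another LLM."""
    try:
        message = json.loads(raw_output)
    except json.JSONDecodeError:
        # Unstructured text is treated as a human-actionable answer.
        return {"target": "human", "text": raw_output}
    if not isinstance(message, dict):
        return {"target": "human", "text": raw_output}

    if "tool" in message:
        # System-actionable: a structured function call.
        func = TOOLS.get(message["tool"])
        if func is None:
            return {"target": "human", "text": f"Unknown tool: {message['tool']}"}
        return {"target": "system", "result": func(**message.get("arguments", {}))}
    if "next_prompt" in message:
        # LLM-actionable: a prompt plus context for another model.
        return {
            "target": "llm",
            "prompt": message["next_prompt"],
            "context": message.get("context", ""),
        }
    return {"target": "human", "text": raw_output}
```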

SaaS apps vs. agentic AI: A shift in system design

Adding an LLM to a system introduces powerful new capabilities—reasoning, summarization, synthesis, decision-making—that traditional software can’t replicate. But unlocking those capabilities requires a shift in how systems are designed. The table below compares how traditional SaaS applications differ from agentic systems that harness the full power of LLMs.

| SaaS Applications | Agentic AI |
|---|---|
| Transaction-centered: short, stateless interactions | Conversation-centered: long-lived, stateful interactions |
| Immediate response: system processes request and returns a result | Context-aware responses: system remembers past interactions to stay relevant |
| Minimal compute and memory per transaction | High compute and memory usage per conversation |
| Predictable scaling: more users = more transactions = predictable load | Unpredictable scaling: more users lead to exponentially higher workload due to longer interactions and agent-driven actions |

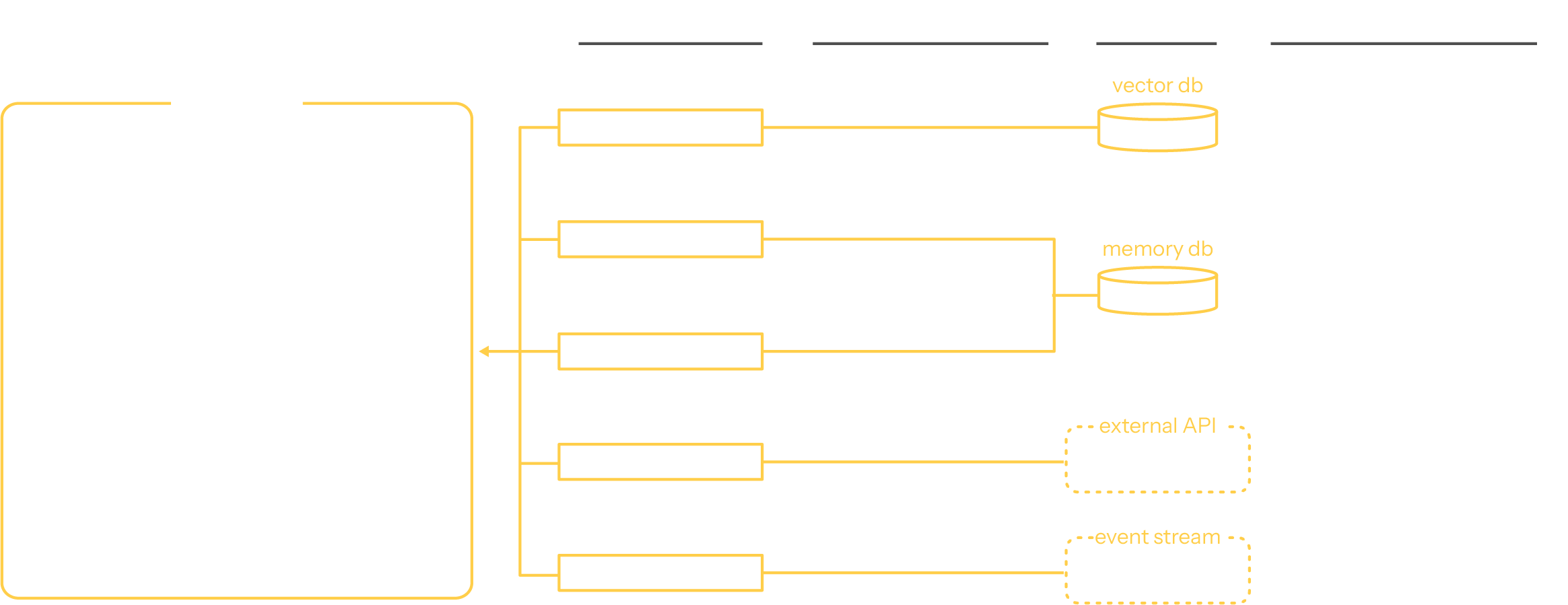

Agentic AI architecture

Traditional enterprise applications follow an N-tier architecture, designed for structured transactions and deterministic workflows. But agents operate in an entirely different paradigm—conversational, adaptive, and stateful. To support this, enterprises need an agentic tier (A-tier) architecture, which runs alongside the existing N-tier stack.

In the A-tier architecture, swarms of agents run autonomously, managing their own state and coordinating with each other. To function effectively, agents depend on A-tier infrastructure, which provides five critical capabilities: agent lifecycle management, agent orchestration, memory, streaming, and integrations.

This N+A-tier approach ensures that enterprises can harness the power of agents without disrupting their existing technology stack, enabling intelligent automation at scale.

Agentic tier capabilities

Agent orchestration: Durable workflows that manage long-running, multi-step processes, ensuring agent actions and LLM calls execute reliably, even in the face of hardware failures, timeouts, hallucinations, or restarts.
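The core idea behind a durable workflow is that each completed step is recorded, so a restart resumes where the process stopped instead of repeating work. The following is a minimal sketch under stated assumptions: a plain dictionary stands in for durable storage, and `run_workflow`, `fetch_order`, and `charge_card` are hypothetical names chosen for the example.

```python
from typing import Callable, Dict, List, Tuple

# A step is a name plus an action that reads the results of earlier steps.
Step = Tuple[str, Callable[[Dict[str, str]], str]]


def run_workflow(steps: List[Step], checkpoint: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, skipping any whose result is already checkpointed."""
    for name, action in steps:
        if name in checkpoint:
            continue  # completed before a crash or restart; do not repeat it
        checkpoint[name] = action(checkpoint)  # record the result before moving on
    return checkpoint


# Hypothetical steps: the second one fails on its first attempt.
attempts = {"charge": 0}


def fetch_order(state: Dict[str, str]) -> str:
    return "order-42"


def charge_card(state: Dict[str, str]) -> str:
    attempts["charge"] += 1
    if attempts["charge"] == 1:
        raise ConnectionError("simulated crash")
    return f"charged {state['fetch_order']}"


STEPS: List[Step] = [("fetch_order", fetch_order), ("charge_card", charge_card)]
```

Calling `run_workflow(STEPS, store)` a second time with the same `store` after the simulated crash runs only `charge_card`, because `fetch_order` is already checkpointed.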

Memory: Persistent short- and long-term memory to maintain context across conversational interactions.

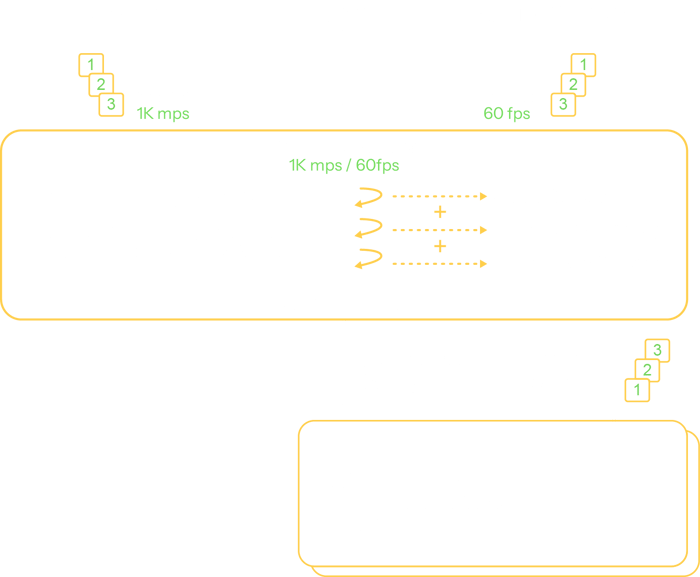
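Short- and long-term memory can be pictured as two stores with different retention rules. This sketch is an assumption-laden illustration: `AgentMemory` is a hypothetical class, the short-term window is a fixed number of turns, and both stores live in process memory rather than in the persistent storage a real agent would need.

```python
from collections import deque
from typing import Deque, Dict, List


class AgentMemory:
    """Short-term memory is a bounded window of turns; long-term memory is a key-value store."""

    def __init__(self, window: int = 3) -> None:
        self.short_term: Deque[str] = deque(maxlen=window)
        self.long_term: Dict[str, str] = {}

    def remember_turn(self, turn: str) -> None:
        self.short_term.append(turn)  # the oldest turn drops out when the window is full

    def remember_fact(self, key: str, value: str) -> None:
        self.long_term[key] = value  # facts persist across the whole conversation

    def context(self) -> List[str]:
        """Return long-term facts first, then the most recent turns."""
        facts = [f"{key}: {value}" for key, value in sorted(self.long_term.items())]
        return facts + list(self.short_term)
```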

Streaming: A streaming architecture enables agents to process and respond quickly to high data volumes such as video, audio, IoT data, metrics, and event streams, gracefully handling LLM latency and ensuring responsiveness. Streaming is also critical for supporting ambient agents, which are AI agents that continuously monitor event streams and respond only when necessary, enabling proactive, context-aware actions rather than reactive, chat-style interactions.
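An ambient agent, as described above, consumes every event but produces output only for the few that matter. A minimal sketch, assuming a hypothetical `ambient_agent` generator and caller-supplied `needs_attention` and `respond` functions (in practice `respond` would involve an LLM call):

```python
from typing import Callable, Iterable, Iterator


def ambient_agent(
    events: Iterable[float],
    needs_attention: Callable[[float], bool],
    respond: Callable[[float], str],
) -> Iterator[str]:
    """Consume a stream continuously, acting only on events that need a response."""
    for event in events:
        if needs_attention(event):  # cheap filter applied to every event
            yield respond(event)    # expensive action taken only when necessary
```

For example, feeding it temperature readings with `needs_attention=lambda t: t > 90` yields a response only for readings above that threshold and stays silent otherwise.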

Integrations: Native connectivity with enterprise APIs, databases, and external tools to extend agent capabilities, leveraging established standards like OpenAPI and emerging ones like the Model Context Protocol, which define how agents provide context and tools to LLMs.

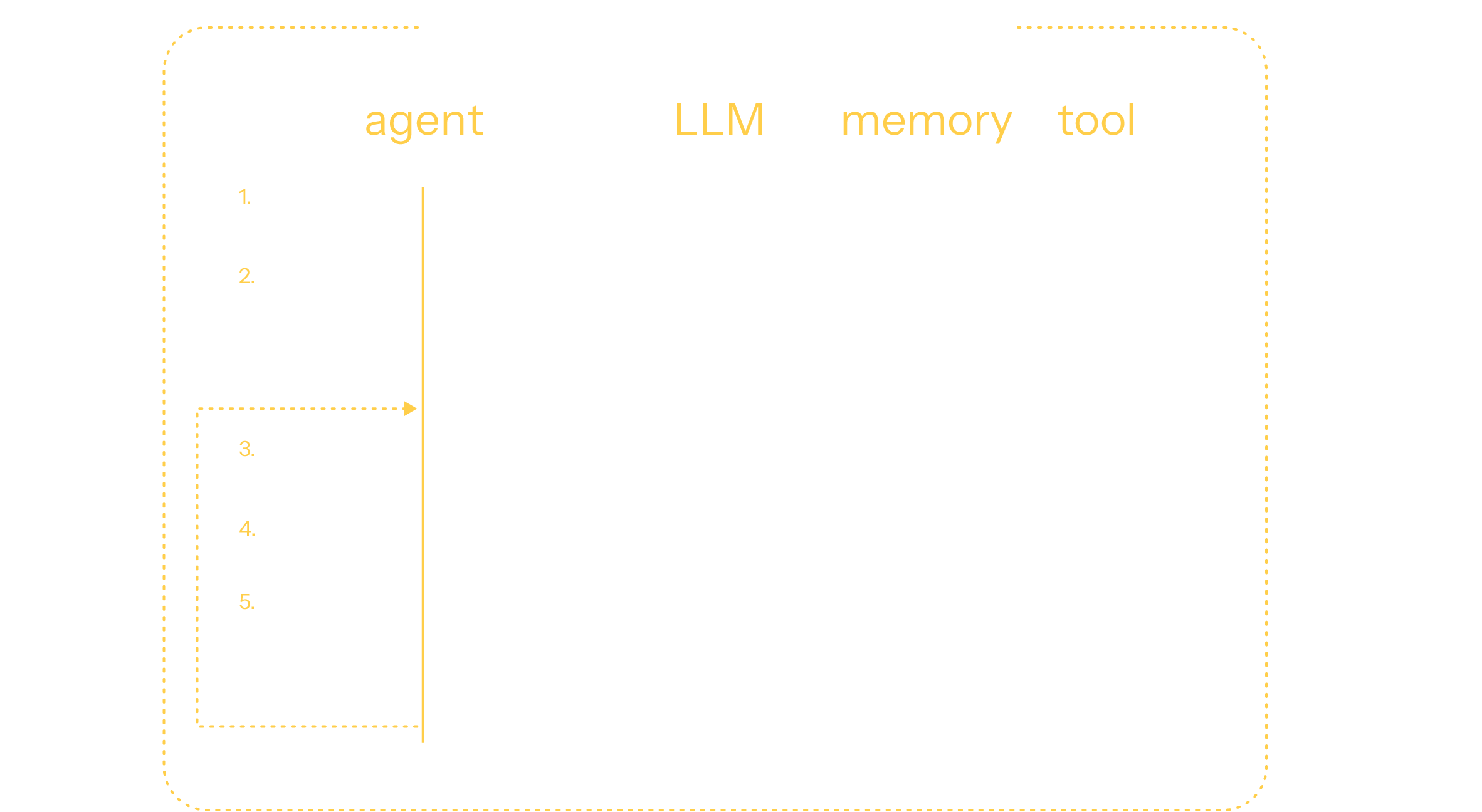

Agentic enrichment loop

AI agents use the agentic enrichment loop to iteratively improve their reasoning. The loop begins with an initial prompt (1), which is sent to a large language model (2). The output is processed (3) and used to refine the original prompt (4). The refined prompt can then be sent to the large language model again (5). Steps 3 to 5 repeat (augmenting, generating, and refining) until the result meets the desired standard of quality.
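The loop can be sketched in a few lines. This is an illustrative sketch, not a reference implementation: `enrichment_loop`, `stub_llm`, and `stub_evaluate` are hypothetical names, the stubs stand in for a real model and a real quality check, and the `max_rounds` cap is an added safeguard against looping forever.

```python
from typing import Callable, Tuple


def enrichment_loop(
    prompt: str,
    llm: Callable[[str], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    max_rounds: int = 5,
) -> str:
    """Generate, evaluate, and refine until the output passes or rounds run out."""
    output = llm(prompt)  # steps 1 and 2: send the initial prompt to the model
    for _ in range(max_rounds):
        good_enough, feedback = evaluate(output)  # step 3: process the output
        if good_enough:
            break
        # Step 4: refine the prompt with the previous attempt and feedback.
        prompt = f"{prompt}\n\nPrevious attempt:\n{output}\nFeedback: {feedback}"
        output = llm(prompt)  # step 5: send the refined prompt to the model again
    return output


# Hypothetical stubs: the "model" improves its draft each time it sees feedback.
def stub_llm(prompt: str) -> str:
    return f"draft v{prompt.count('Feedback:') + 1}"


def stub_evaluate(output: str) -> Tuple[bool, str]:
    return output.endswith("v3"), "add more detail"
```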

Agentic workflow patterns

Fundamentally, agents combine the agentic enrichment loop in different workflow patterns. The following are some common agentic workflow patterns.

Used for tasks that can be decomposed into a linear sequence of smaller subtasks. For example: write a blog post about a given topic, and then translate to French.
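A sketch of this sequential pattern, where each step's output becomes the next step's input. The names `chain`, `write_post`, and `translate_to_french` are hypothetical; in a real agent each step would be an LLM call with its own prompt.

```python
from typing import Callable, List


def chain(steps: List[Callable[[str], str]], task: str) -> str:
    """Run the steps in order, feeding each result into the next step."""
    result = task
    for step in steps:
        result = step(result)
    return result


# Hypothetical stand-ins for two LLM calls.
def write_post(topic: str) -> str:
    return f"Post about {topic}"


def translate_to_french(text: str) -> str:
    return f"[FR] {text}"
```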

Used to route a task to the model best suited to handle it. For example: classify a given task and route it to the technical model or the sales model.
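A sketch of this routing pattern: classify first, then hand the task to the matching handler. Everything here is hypothetical; `classify` uses keyword matching as a stand-in for an LLM classifier, and the entries in `MODELS` stand in for specialized models.

```python
from typing import Callable, Dict

TECHNICAL_WORDS = {"error", "api", "install", "bug"}


def classify(task: str) -> str:
    """Stand-in for an LLM classifier: label a task as technical or sales."""
    words = set(task.lower().split())
    return "technical" if words & TECHNICAL_WORDS else "sales"


# Hypothetical specialized models, keyed by the classifier's label.
MODELS: Dict[str, Callable[[str], str]] = {
    "technical": lambda task: f"technical model handles: {task}",
    "sales": lambda task: f"sales model handles: {task}",
}


def route(task: str) -> str:
    """Send the task to the model chosen by the classifier."""
    return MODELS[classify(task)](task)
```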

Used to break a task into independent subtasks that run simultaneously. This is commonly used to get a diverse set of outputs to improve quality, or to improve performance. For example: execute security tests from different personas.
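A sketch of this parallel pattern using a thread pool from Python's standard library, which suits I/O-bound work such as waiting on model responses. The `run_parallel` function and the `PERSONAS` table are hypothetical; each persona would normally be a separate LLM call with its own instructions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_parallel(task: str, workers: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Run every worker on the same task concurrently and collect results by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(workers))) as pool:
        futures = {name: pool.submit(worker, task) for name, worker in workers.items()}
        return {name: future.result() for name, future in futures.items()}


# Hypothetical personas for a security review.
PERSONAS: Dict[str, Callable[[str], str]] = {
    "external attacker": lambda task: f"attacker findings for {task}",
    "internal auditor": lambda task: f"auditor findings for {task}",
}
```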

Used when the subtasks are not known in advance. In this workflow, an orchestrator LLM dynamically decomposes the task, delegates the subtasks to worker LLMs, and then synthesizes the results. For example: gather and analyze information from a set of sources identified by the orchestrator LLM, then synthesize the findings into an answer.
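A sketch of this pattern, in which the list of subtasks is produced at run time rather than fixed in code. The three stub functions are hypothetical stand-ins for LLM calls: one plans, one works on a single subtask, and one combines the results.

```python
from typing import Callable, List


def orchestrate(
    task: str,
    plan: Callable[[str], List[str]],
    work: Callable[[str], str],
    synthesize: Callable[[str, List[str]], str],
) -> str:
    """Decompose a task at run time, delegate the pieces, and combine the results."""
    subtasks = plan(task)  # the orchestrator decides the subtasks dynamically
    results = [work(subtask) for subtask in subtasks]  # workers handle each one
    return synthesize(task, results)


# Hypothetical stubs standing in for the orchestrator and worker LLMs.
def stub_plan(task: str) -> List[str]:
    return [f"search wiki for {task}", f"search tickets for {task}"]


def stub_work(subtask: str) -> str:
    return f"notes from '{subtask}'"


def stub_synthesize(task: str, results: List[str]) -> str:
    return f"{task}: " + "; ".join(results)
```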

Used to create and execute a complex plan while staying grounded with human feedback. For example, create a travel itinerary and book all reservations for a vacation.
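One possible reading of "grounded with human feedback" is a review gate before each step of the plan. The sketch below makes that assumption explicit: `execute_plan` and the decision values `approve`, `skip`, and `reject` are hypothetical, and `stub_review` stands in for a real person responding to a prompt.

```python
from typing import Callable, List


def execute_plan(
    steps: List[str],
    review: Callable[[str], str],
    act: Callable[[str], str],
) -> List[str]:
    """Execute plan steps in order, asking a human reviewer before each one."""
    done: List[str] = []
    for step in steps:
        decision = review(step)
        if decision == "reject":
            break  # stop and return control to the human
        if decision == "skip":
            continue  # leave this step out but keep going
        done.append(act(step))
    return done


# Hypothetical reviewer: declines the rental car, approves everything else.
def stub_review(step: str) -> str:
    return "skip" if "rental car" in step else "approve"


def stub_act(step: str) -> str:
    return f"booked: {step}"
```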

Additional resources

Building a real-time video AI service with Google Gemini

A blueprint for agentic AI services