Agentic AI Systems

Why is agentic AI important?

Agentic systems mark the beginning of a new era in computing, one where software doesn't just follow instructions but actively learns, adapts, and takes initiative. These systems help businesses move faster, get more done, and deliver more personalized experiences at scale. By putting autonomous agents to work across teams, companies can boost productivity, accelerate decision-making, and tailor services in real time. This shift from traditional software to agentic systems is driving a sharp increase in demand for compute and orchestration, illustrated by the jump in users and transactions per second (TPS) in the table below, as always-on AI agents become deeply embedded in day-to-day operations.

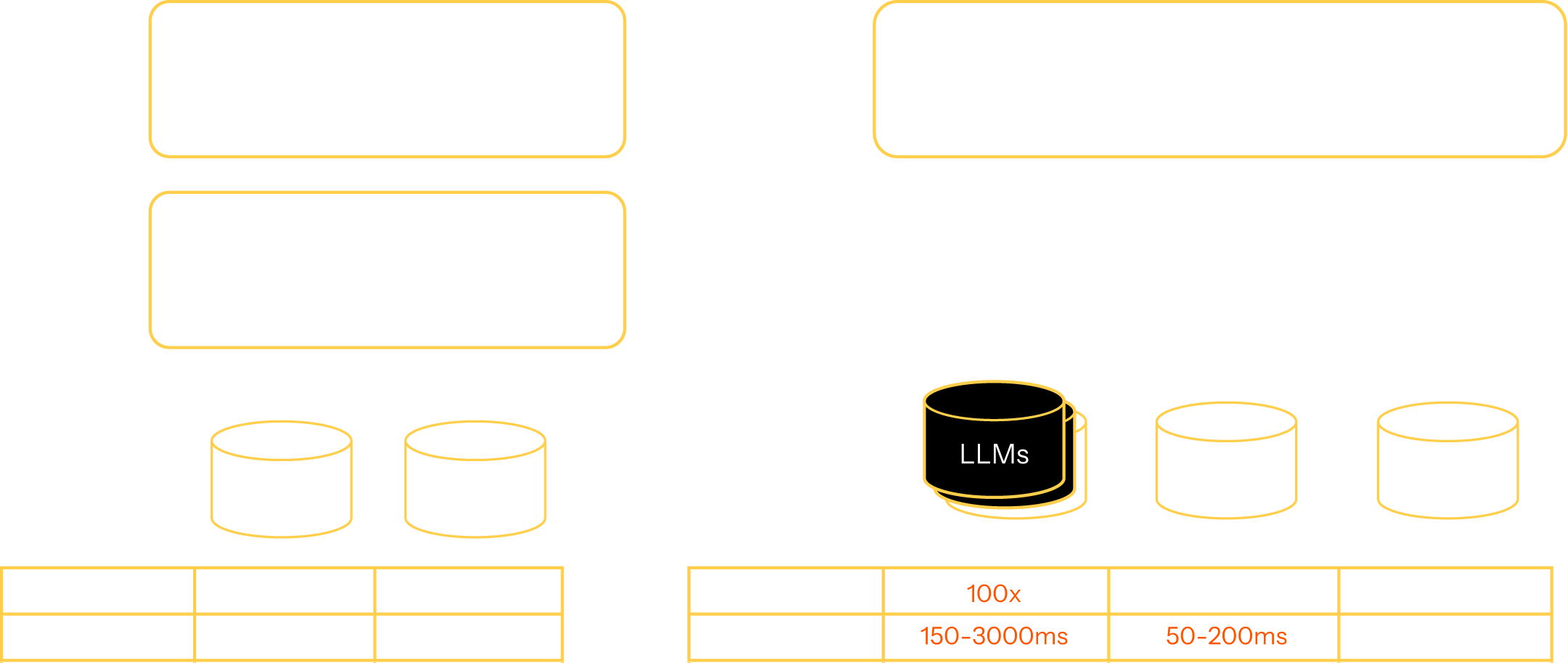

| | Mainframe | Web | Cloud | Mobile | Agentic |
|---|---|---|---|---|---|
| Users | thousands | millions | tens of millions | billions | trillions |
| TPS | 100 | 500 | 2,500 | 10,000 | 1,000,000 |
| TPS growth vs. previous era | | 5x | 5x | 4x | 100x |

What is an agentic AI system?

An agentic AI system is a collection of agents that reason, plan, and act autonomously – while remaining governed and controlled.

An agent is an AI-powered component capable of making decisions, taking actions, and adapting to context in pursuit of a goal. Agentic systems combine multiple agents to solve complex tasks collaboratively—sharing context, delegating work, and responding to dynamic environments.

Unlike traditional AI assistants that follow predefined workflows or respond to user prompts, agentic systems are proactive, goal-driven, and capable of coordinating across tools, data sources, and constraints—while staying aligned with human intent.

Architecturally, agentic AI systems are orchestrated services that maintain conversational context, manage state across interactions, and adapt dynamically to evolving user inputs and changing environments. They require seamless coordination between AI models, business rules, data sources, and real-time events, all while preserving long-term memory and intent.
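To make the definition of an agent more concrete, here is a minimal sketch of a single agent loop in Python. It is an illustration only, not Akka SDK code: the `Agent` class, the `Tool` type, and the rule-based `decide` step are hypothetical names introduced for this example, and a real agent would typically delegate the decision step to a language model.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical tool type: takes the current context and returns an observation.
Tool = Callable[[dict], str]


@dataclass
class Agent:
    goal: str
    tools: dict[str, Tool]
    memory: list[str] = field(default_factory=list)  # context kept across steps

    def decide(self, context: dict) -> Optional[str]:
        """Pick the next tool to run, or None when nothing is left to do.

        Stand-in for model-driven reasoning: run each tool once, in order.
        """
        for name in self.tools:
            if name not in context["done"]:
                return name
        return None

    def run(self, max_steps: int = 10) -> list[str]:
        context = {"goal": self.goal, "done": []}
        for _ in range(max_steps):  # a step limit keeps the loop bounded
            choice = self.decide(context)
            if choice is None:
                break
            observation = self.tools[choice](context)
            context["done"].append(choice)
            self.memory.append(f"{choice}: {observation}")
        return self.memory


agent = Agent(
    goal="summarize open orders",
    tools={
        "fetch_orders": lambda ctx: "3 open orders",
        "summarize": lambda ctx: "summary ready",
    },
)
history = agent.run()
```

The loop separates deciding from acting and records every observation in memory, which is the part an orchestration layer would persist between interactions. The `max_steps` limit is one simple way to keep an autonomous loop under control.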

Key properties of agentic AI systems

Traditional, transaction-centered applications are optimized for short, stateless interactions such as clicking a button or submitting a form. Agentic AI systems are conversation-centered, and need to optimize for long-lived, stateful interactions such as ongoing dialogue or multi-step reasoning.

Conversational

Maintain long-term context and history across interactions.

Autonomous

Operate independently, pursuing goals with minimal human input.

Composable

Combine agents, functions, and logic into adaptable workflows.

Fault-tolerant and recoverable

Handle errors gracefully, recover state, and guarantee execution even in adverse conditions.

Event-driven

Initiate actions in response to external signals.

Streaming

Continuously process a stream of inputs (e.g., video, text, audio).
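As an illustration of how the event-driven and recoverable properties can fit together, the sketch below uses event sourcing, one common technique for rebuilding state after a failure. It is an assumption-laden example rather than Akka's implementation: `Session`, `Journal`, and the event shapes are hypothetical, and the journal is an in-memory stand-in for a durable log.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Conversation state that is derived only from events."""
    messages: list[str] = field(default_factory=list)
    turns: int = 0

    def apply(self, event: dict) -> None:
        if event["type"] == "message_received":
            self.messages.append(event["text"])
            self.turns += 1
        elif event["type"] == "history_cleared":
            self.messages.clear()


class Journal:
    """In-memory stand-in for a durable, append-only event log."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> None:
        self._events.append(event)

    def replay(self) -> Session:
        # Rebuild state from scratch by applying every stored event in order.
        session = Session()
        for event in self._events:
            session.apply(event)
        return session


journal = Journal()
live = Session()
for event in [
    {"type": "message_received", "text": "hello"},
    {"type": "message_received", "text": "book a flight"},
]:
    journal.append(event)  # persist first...
    live.apply(event)      # ...then update the in-memory state

# Simulate a crash: the in-memory session is lost, the journal survives.
del live
recovered = journal.replay()
```

Because state changes only through `apply`, replaying the journal yields the same session the agent had before the failure.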

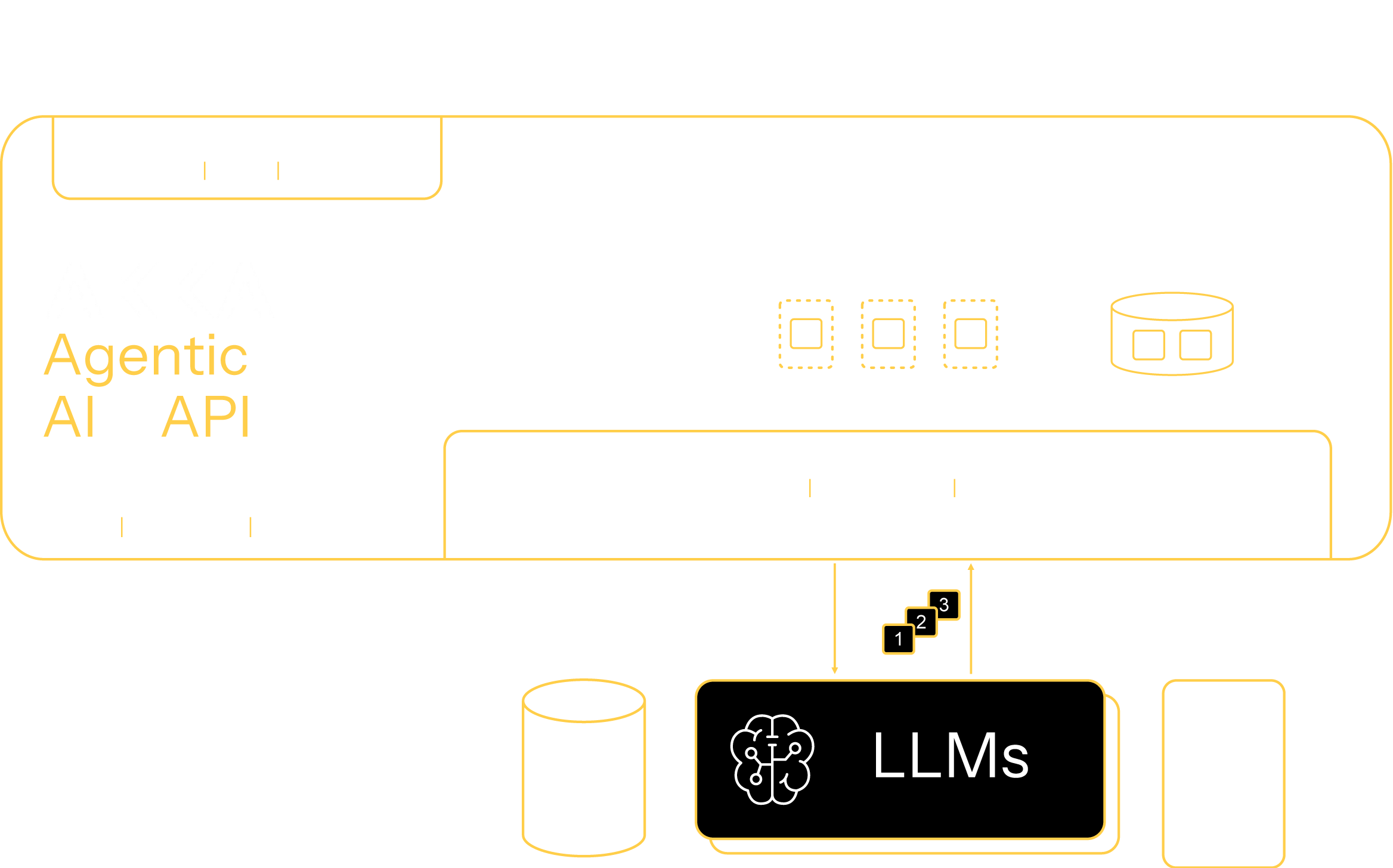

Akka Platform for agentic AI systems

The Akka Platform enables developers to build highly efficient agentic AI systems that are elastic, agile, and resilient.

How Akka enables agentic AI systems

Agent lifecycle management

Automatically deploy agents across regions for maximum availability. Elastically scale agents on demand. Optimize your architecture for maximum efficiency.

- Agent versioning

- Agent replay

- Event, workflow, and agent debugger

- Zero-downtime agent upgrades

Agent orchestration

Define and manage complex, long-running agents. Integrated support for task coordination, managing state transitions, and durable execution.

- Event-driven runtime benchmarked to 10M TPS

- SDK with AI workflow component

- Serial, parallel, state machine, & human-in-the-loop flows

- Sub-tasking agents and multi-agent coordination
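The flow types listed above can be sketched with plain Python `asyncio`. This is a hedged illustration of serial, parallel, and human-in-the-loop steps, not the Akka workflow component: the functions `research`, `draft`, and `human_approval` are hypothetical, and the approval decision is passed in as an argument instead of waiting for a real person. The sketch also omits durable execution, which a real orchestrator would add by persisting progress between steps.

```python
import asyncio


async def research(topic: str) -> str:
    await asyncio.sleep(0)  # placeholder for a model or tool call
    return f"notes on {topic}"


async def draft(notes: list[str]) -> str:
    await asyncio.sleep(0)
    return "draft based on " + " and ".join(notes)


async def human_approval(document: str, approve: bool) -> bool:
    # Stand-in for pausing until a person responds; the answer is passed in.
    await asyncio.sleep(0)
    return approve


async def workflow(topics: list[str], approve: bool) -> str:
    # Parallel step: research every topic concurrently.
    notes = await asyncio.gather(*(research(t) for t in topics))
    # Serial step: drafting depends on all research results.
    document = await draft(list(notes))
    # Human-in-the-loop step: publish only after approval.
    if await human_approval(document, approve):
        return f"published: {document}"
    return "rejected"


result = asyncio.run(workflow(["pricing", "latency"], approve=True))
```

`asyncio.gather` returns results in the order the tasks were submitted, so the draft sees the notes in a predictable order even though the research steps run concurrently.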

Context database

Efficiently store and retrieve relevant context of infinite length.

- Agentic sessions with infinite context

- Context snapshot pruning to avoid LLM token caps

- In-memory context sharding, load balancing, and traffic routing

- Multi-region context replication

- Replication filters for region-pinning user context data

- Embedded context per
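To show what pruning context to stay under a model's token limit can look like, here is a minimal sketch. It is one possible strategy under stated assumptions, not a description of how Akka prunes snapshots: `count_tokens` approximates tokens by whitespace-separated words, and `prune_context` with its `budget` and `keep_first` parameters is a hypothetical helper.

```python
def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words approximate model tokens.
    return len(text.split())


def prune_context(messages: list[str], budget: int, keep_first: int = 1) -> list[str]:
    """Keep the first `keep_first` messages plus the newest ones that fit `budget`."""
    head = messages[:keep_first]
    tail = messages[keep_first:]
    used = sum(count_tokens(m) for m in head)
    kept: list[str] = []
    for message in reversed(tail):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return head + list(reversed(kept))  # restore chronological order


history = [
    "You are a travel assistant.",
    "I want to fly to Oslo in May.",
    "Sure, which airport are you leaving from?",
    "Heathrow, and I prefer morning flights.",
]
pruned = prune_context(history, budget=20)
```

With a budget of 20 words, the opening instruction and the two most recent messages are kept and the oldest user message is dropped. A production system would use the model's own tokenizer and might summarize dropped messages instead of discarding them.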

Streaming endpoints

Continuously process video, audio, text and other inputs with unparalleled streaming capabilities.

- Shared compute: agentic co-execution with API services

- HTTP and gRPC custom API endpoints

- Custom protocols, media types, and edge deployments

- Real-time streaming ingest, benchmarked to over 1TB

Agent connectivity and adapters

Reliably connect to large language models, vector databases, and other systems, with automatic backpressure.

- Non-blocking, streaming LLM inference adapters with backpressure

- Multi-LLM selection

- LLM adapters and hundreds of ML algorithms

- Agent-to-agent brokerless messaging

- Hundreds of third-party integrations
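Backpressure, mentioned above, means a fast producer is slowed to the pace of a slower consumer instead of buffering without limit. The sketch below demonstrates the idea with a bounded `asyncio.Queue` from the Python standard library. It is a generic illustration, not an Akka adapter: `producer`, `consumer`, and the simulated model call are assumptions made for the example.

```python
import asyncio


async def producer(queue: asyncio.Queue, prompts: list[str]) -> None:
    for prompt in prompts:
        # put() suspends while the queue is full, so the producer slows
        # down to the consumer's pace instead of buffering without limit.
        await queue.put(prompt)
    await queue.put(None)  # sentinel: no more work


async def consumer(queue: asyncio.Queue, results: list[str]) -> None:
    while True:
        prompt = await queue.get()
        if prompt is None:
            break
        await asyncio.sleep(0.01)  # stand-in for a slow LLM call
        results.append(f"answer to {prompt}")


async def main() -> list[str]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=2)  # the bound creates backpressure
    results: list[str] = []
    await asyncio.gather(
        producer(queue, [f"q{i}" for i in range(5)]),
        consumer(queue, results),
    )
    return results


answers = asyncio.run(main())
```

The `maxsize=2` bound is the whole mechanism: at most two prompts wait in memory at any time, regardless of how many the producer has ready.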

Developer experience

Akka provides a simple, yet highly expressive, SDK. Write business logic and optimize your AI quality, without worrying about efficiency, scale, or resilience.